The Azure Resource Manager (ARM) exposes many capabilities you can use to work with the Azure platform. One such capability is an API that allows client systems to send ARM templates to the API. These ARM templates contain JSON‐structured configurations that instruct the ARM API to provision and configure Azure products, features, and services. Instead of provisioning and configuring resources in the Azure portal, you can send an ARM template to the ARM API. The main page includes an Export ARM Template tile. Consider exporting the ARM template and taking a look at the JSON files. With only minimal changes to the exported ARM template, you can deploy the same configuration into a different region.
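To make the region change concrete, the following is a minimal Python sketch that builds an ARM deployment parameters file pointing the same template at a new region. It assumes the template declares parameters named `factoryName` and `location`. Those names, along with the factory name and region in the example, are hypothetical, so check which parameters your exported template actually declares.

```python
import json

# Schema URL used by standard ARM deployment parameters files.
PARAMS_SCHEMA = (
    "https://schema.management.azure.com/schemas/2019-04-01/"
    "deploymentParameters.json#"
)


def build_parameters_file(factory_name: str, location: str) -> dict:
    """Return an ARM parameters document targeting one region.

    The parameter names 'factoryName' and 'location' are assumptions;
    they must match the parameters declared in your exported template.
    """
    return {
        "$schema": PARAMS_SCHEMA,
        "contentVersion": "1.0.0.0",
        "parameters": {
            "factoryName": {"value": factory_name},
            "location": {"value": location},
        },
    }


if __name__ == "__main__":
    # Hypothetical second deployment of the same configuration in West US 2.
    params = build_parameters_file("adf-sales-westus2", "westus2")
    print(json.dumps(params, indent=2))
```

You would save this output next to the exported template and submit both to the ARM API through any ARM client. For example, the Azure CLI accepts `az deployment group create --resource-group <group> --template-file template.json --parameters @params.westus2.json`, where the file names are placeholders.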

Author

The Author section of the Manage hub contains two features: triggers and global parameters.

TRIGGERS

Triggers start the orchestration and execution of your pipelines. As in Azure Synapse Analytics, there are four types of triggers: scheduled, tumbling window, storage events, and custom events.
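Behind the UI, each trigger is stored as a JSON definition whose `type` identifies which of the four kinds it is. The Python sketch below builds a scheduled trigger definition as a dictionary. The trigger name, pipeline name, and start time are hypothetical, and the helper function is illustrative rather than part of any Azure SDK.

```python
import json

# JSON "type" values ADF uses for the four trigger kinds.
TRIGGER_TYPES = {
    "scheduled": "ScheduleTrigger",
    "tumbling window": "TumblingWindowTrigger",
    "storage events": "BlobEventsTrigger",
    "custom events": "CustomEventsTrigger",
}


def schedule_trigger(name: str, pipeline: str, frequency: str,
                     interval: int, start_time: str) -> dict:
    """Build a schedule trigger definition that starts one pipeline."""
    return {
        "name": name,
        "properties": {
            "type": TRIGGER_TYPES["scheduled"],
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,  # e.g. "Minute", "Hour", "Day"
                    "interval": interval,    # run every <interval> units
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            # A schedule trigger can start one or more pipelines.
            "pipelines": [
                {
                    "pipelineReference": {
                        "referenceName": pipeline,
                        "type": "PipelineReference",
                    }
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical trigger that runs "IngestSales" daily at 02:00 UTC.
    trigger = schedule_trigger("DailyIngest", "IngestSales", "Day", 1,
                               "2024-01-01T02:00:00Z")
    print(json.dumps(trigger, indent=2))
```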

GLOBAL PARAMETERS

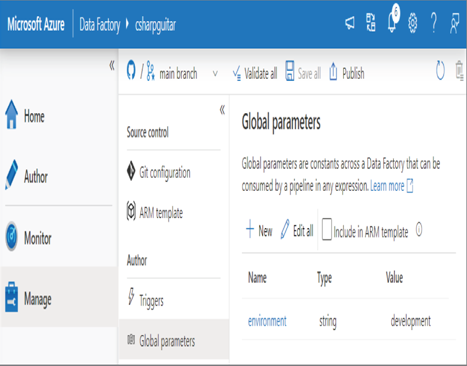

Global parameters are constants defined once at the data factory level that any pipeline can reference in its expressions. Consider that you have development, testing, and production data analytics environments. Keeping all the changes aligned so they can flow through the different stages of testing takes disciplined configuration management. One way to make this easier is to have a global identifier indicating which environment the code is currently running in. This is important because you do not want your development code updating the business‐ready data in production. Therefore, you can define a global parameter named environment, with a value of development, testing, or production, and use it to determine which data source the pipeline runs against (see Figure 3.62).

FIGURE 3.62 Azure Data Factory Manage global parameters
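The idea above is that a single environment value steers which data source a pipeline touches. The Python sketch below models that idea. The container names are hypothetical, and inside ADF you would express the same choice as dynamic content rather than Python, for example `@if(equals(pipeline().globalParameters.environment, 'production'), 'curated', 'curated-dev')`.

```python
# Hypothetical mapping from the 'environment' global parameter value
# to the storage container each environment is allowed to write to.
SINKS = {
    "development": "curated-dev",
    "testing": "curated-test",
    "production": "curated",
}


def resolve_sink(global_parameters: dict) -> str:
    """Pick the target container from the factory's global parameters.

    Raises instead of defaulting, so a missing or misspelled value can
    never silently fall through to production data.
    """
    env = global_parameters.get("environment")
    if env not in SINKS:
        raise ValueError(f"unknown environment: {env!r}")
    return SINKS[env]


if __name__ == "__main__":
    print(resolve_sink({"environment": "development"}))  # curated-dev
```

Failing loudly on an unknown value is a deliberate design choice here. A default branch that maps anything unexpected to production is exactly the mistake the environment flag is meant to prevent.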

Reference the environment global parameter from a pipeline expression, for example in the JSON configuration file for the given pipeline, using the following code:

@pipeline().globalParameters.environment

You can modify the JSON configuration by selecting the braces ({}) on the right side of the Pipeline page.
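Because the reference is plain text inside the pipeline's JSON, you can audit a pipeline for the global parameters it depends on. The Python sketch below uses a regular expression to find them. The pipeline fragment is hypothetical; it stores dynamic content as an object with `"type": "Expression"`, which is how the pipeline JSON typically represents expressions. The pattern matches only the documented `globalParameters` casing.

```python
import json
import re

# Matches references such as @pipeline().globalParameters.environment
GLOBAL_PARAM_REF = re.compile(r"pipeline\(\)\.globalParameters\.(\w+)")


def global_parameters_used(pipeline_json: str) -> set:
    """Return the names of global parameters referenced in a pipeline's JSON."""
    return set(GLOBAL_PARAM_REF.findall(pipeline_json))


if __name__ == "__main__":
    # Hypothetical pipeline with one Set Variable activity whose value
    # is read from the 'environment' global parameter.
    pipeline = {
        "name": "IngestSales",
        "properties": {
            "variables": {"env": {"type": "String"}},
            "activities": [
                {
                    "name": "SetEnv",
                    "type": "SetVariable",
                    "typeProperties": {
                        "variableName": "env",
                        "value": {
                            "value": "@pipeline().globalParameters.environment",
                            "type": "Expression",
                        },
                    },
                }
            ],
        },
    }
    print(sorted(global_parameters_used(json.dumps(pipeline))))  # ['environment']
```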

Security

Security, as always, should be your top priority. Consider consulting an experienced security expert to help you determine the best solution for your scenario.

CUSTOMER MANAGED KEY

The Enable Encryption Using a Customer Managed Key check box was mentioned shortly after Exercise 3.10. Had you selected it and provided an Azure Key Vault key and a managed identity, they would appear here. If you change your mind and want to add a customer managed key after the initial provisioning, you can do so on this page.

CREDENTIALS

The Credentials page enables you to create either a service principal or a user‐assigned managed identity credential. These credentials give the Azure product an identity that can be used to grant it access to other resources.

MANAGED PRIVATE ENDPOINTS

Private endpoints were discussed in the “Managed Private Endpoints” subsection of the “Azure Synapse Analytics” section. Review that section, as the concept is applicable in this context as well.

Author

In this context, authoring is another word for developing. The Author hub is where you will find the tools for data ingestion and transformation.

Dataset

A dataset in Azure Data Factory is the same as in Azure Synapse Analytics. In both ADF and ASA, the dataset is where you identify the data format, the linked service to use, and the specific set of data to be ingested. Perform Exercise 3.12 to create a dataset in Azure Data Factory.
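The three things a dataset identifies map directly onto its JSON definition. The Python sketch below builds a CSV (delimited text) dataset stored in Blob Storage and comments each part. The dataset name, linked service name, container, folder, and file name are hypothetical placeholders, not values from Exercise 3.12.

```python
import json


def delimited_text_dataset(name: str, linked_service: str, container: str,
                           folder: str, file_name: str) -> dict:
    """Build a CSV dataset definition, labeling the three parts it identifies."""
    return {
        "name": name,
        "properties": {
            # 1. Which linked service (connection) to use.
            "linkedServiceName": {
                "referenceName": linked_service,
                "type": "LinkedServiceReference",
            },
            # 2. The data format.
            "type": "DelimitedText",
            "typeProperties": {
                # 3. The actual set of data to ingest.
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "container": container,
                    "folderPath": folder,
                    "fileName": file_name,
                },
                "columnDelimiter": ",",
                "firstRowAsHeader": True,
            },
        },
    }


if __name__ == "__main__":
    ds = delimited_text_dataset("SalesCsv", "BlobStorageLS", "raw",
                                "sales", "sales.csv")
    print(json.dumps(ds, indent=2))
```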